Neural networks can now generate plausible images from a descriptive phrase written in natural language, drawing on what they learned from training on a large dataset of captioned images.

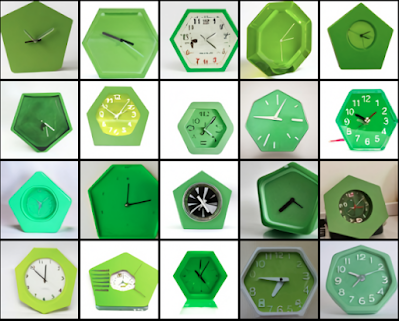

For example, this array of images was created by OpenAI's DALL·E from the prompt "pentagonal green clock."

They're all green, and they all have a sort of clock face with a long hand and a short hand. Those with a numbered dial mostly get the numbers right.

But a second glance reveals plenty of hexagons and heptagons as well, and the spacing and symmetry look off-kilter on many of them.

Here's a set of images generated from the phrase "A store front with the words 'Open AI' written on it."

On the DALL·E page, you can tweak the phrase and see what the model comes up with. This set comes from the prompt "A neon sign that says 'acme.'"

Although the model gets a few obvious things wrong, it's remarkable how the results pick up on incidental details such as the tubular shape of neon lettering and the glow the light casts on the colored background.

The developers say: "We find that DALL·E is sometimes able to render text and adapt the writing style to the context in which it appears. For example, 'a bag of chips' and 'a license plate' each requires different types of fonts, and 'a neon sign' and 'written in the sky' require the appearance of the letters to be changed. Generally, the longer the string that DALL·E is prompted to write, the lower the success rate. We find that the success rate improves when parts of the caption are repeated."

Try it yourself on OpenAI's blog post about DALL·E. Be sure to scroll down and try some of the art styles, too.

Thanks, Joseph Santoyo
